Meet the Author

Phillip Carter

Author

Phillip is a Product Manager by day and an OSS developer in the evenings. He's worked on developer tools and experiences his entire career, building everything from compilers to high-level IDE tooling. Now he's working out how to give developers the best possible experience with observability tooling. Away from the computer, you can find Phillip hiking or snowboarding in the mountains, and increasingly out on the water trying other board sports.


LLMs Are Weird Computers

The common definition of a computer is a programmable machine that stores, retrieves, and processes data. I would argue that ChatGPT already fits this definition, as you can control how it responds with prompts (programs), it can store data you pass it (memory), it can search that data to generate a response (RAG), and it can process inputs to produce a response (inference).

How I Code With LLMs These Days

I first started using AI coding assistants in early 2021, with an invite code from a friend who worked on the original GitHub Copilot team. Back then, the workflow was just single-line tab completion, but you could also guide code generation with comments, and it would try its best to implement what you wanted.

Three Properties of Data to Make LLMs Awesome

Back in May 2023, I helped launch my first bona fide feature that uses LLMs in production. It was difficult in lots of different ways, but one thing I didn’t elaborate on across several blog posts was how lucky I was to have a coherent way to get the data I needed to make the feature useful for users.

Action Recommended: OpenTelemetry HTTP Attributes Breaking Changes

Earlier this year, the folks working on OpenTelemetry launched an effort to stabilize HTTP Semantic Conventions. In November 2023, OpenTelemetry announced that HTTP Semantic Conventions were stable. They accomplished this by merging the existing HTTP Semantic Conventions with the Elastic Common Schema HTTP attribute conventions.

So We Shipped an AI Product. Did it Work?

Like many companies, earlier this year we saw an opportunity with LLMs and quickly (but thoughtfully) started building a capability. About a month later, we released Query Assistant to all customers as an experimental feature. We then iterated on it, using data from production to inform a multitude of additional enhancements, and ultimately took Query Assistant out of experimentation and turned it into a core product offering. However, getting Query Assistant from concept to feature diverted R&D and marketing resources, forcing the question: did investing in LLMs do what we wanted it to do?

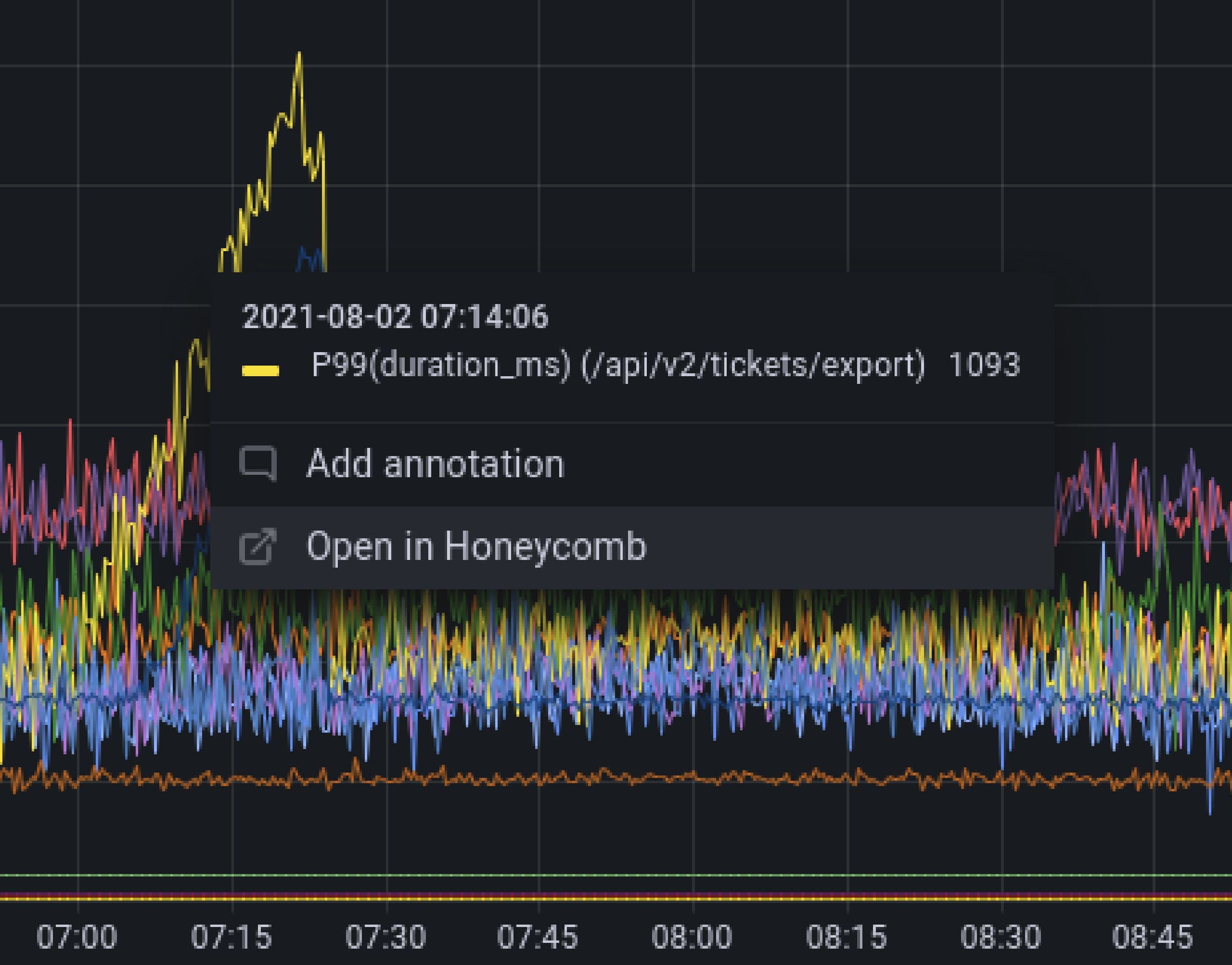

Improving LLMs in Production With Observability

In early May, we released the first version of our new natural language querying interface, Query Assistant. We also talked a lot about the hard stuff we encountered when building and releasing this feature to all Honeycomb customers. But what we didn’t talk about was how we know whether our use of an LLM is working well in production! That’s what this post is all about.

All the Hard Stuff Nobody Talks About when Building Products with LLMs

There’s a lot of hype around AI, and in particular, Large Language Models (LLMs). To be blunt, a lot of that hype is just some demo bullshit that would fall over the instant anyone tried to use it for a real task that their job depends on. The reality is far less glamorous: it’s hard to build a real product backed by an LLM.

Observability, Meet Natural Language Querying with Query Assistant

Engineers know best. No machine or tool will ever match the context and capacity that engineers have to make judgment calls about what a system should or shouldn’t do. We built Honeycomb to augment human intuition, not replace it.

OpenTelemetry Logs, OpenTelemetry Go, and the Road Ahead

We’ve got a lot of OpenTelemetry-flavored honey to send your way, ranging from OpenTelemetry SDK distribution updates to protocol support. We now support OpenTelemetry logs, released a new SDK distribution for OpenTelemetry Go, and have some updates around OpenTelemetry + Honeycomb to share. Let’s see what all the buzz is about this time!

An Observability Guide From Someone with a Precarious Grasp on the Topic

I’m Phillip, a product manager here at Honeycomb. After eleven-ish months of working on our product, I totally understand observability, right? …Kinda? Sorta? Maybe? I’m not sure—but, I have been sitting in this space…