Debug LLM applications with confidence

Generative AI is powerful but unpredictable. With Honeycomb, you can see in detail how your LLMs behave in production, troubleshoot failures faster, and continuously improve model performance, all in real time with real data.

AI is a black box in production

Building with generative AI is an art. A single prompt tweak can lead to wildly different responses, making debugging difficult.

Testing Limitations

Traditional testing methods are ineffective for AI-driven systems, which require new approaches to evaluating performance.

Unpredictable Failures

Small changes in prompts or models can introduce failures that are hard to anticipate.

Debugging Challenges

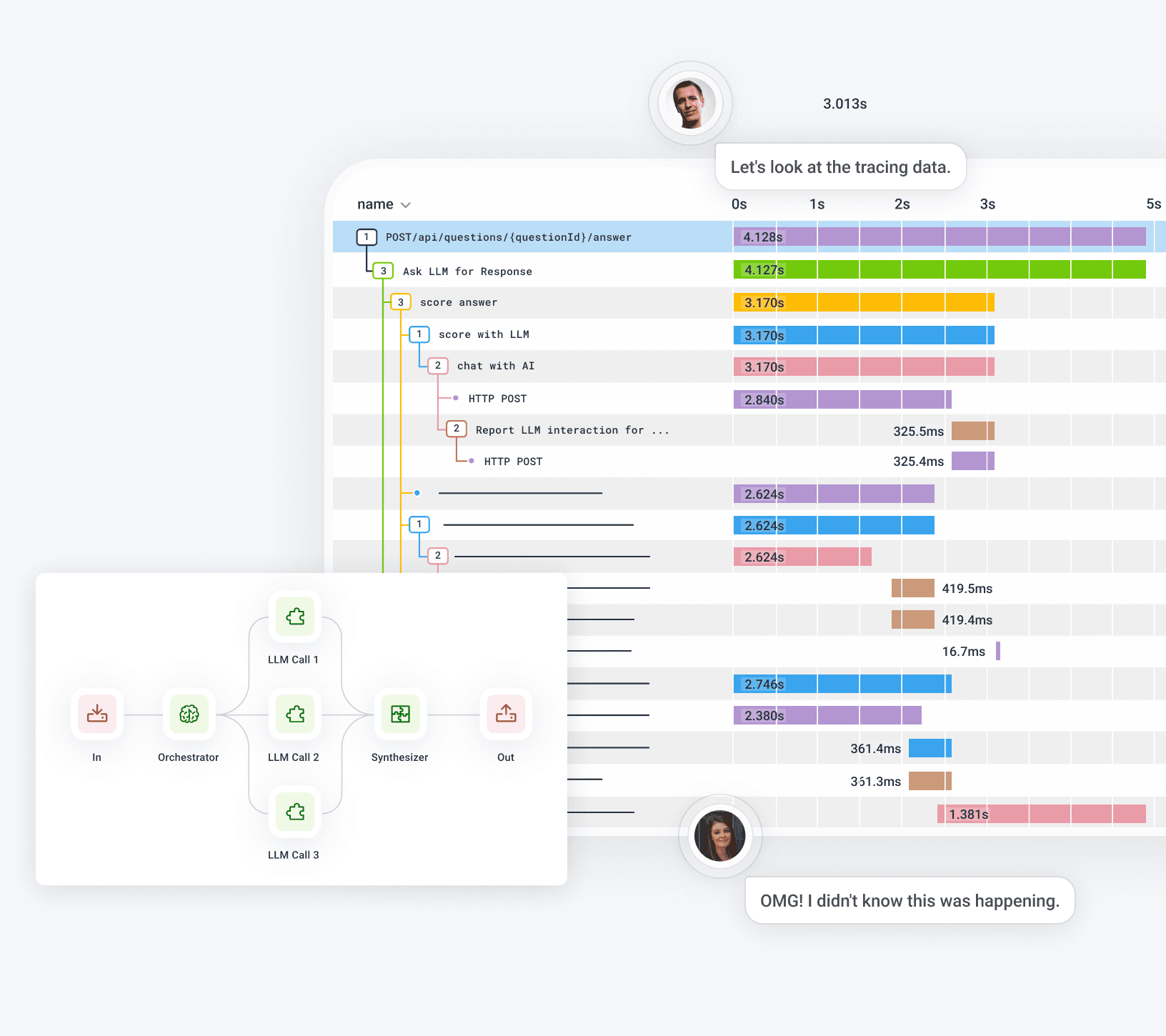

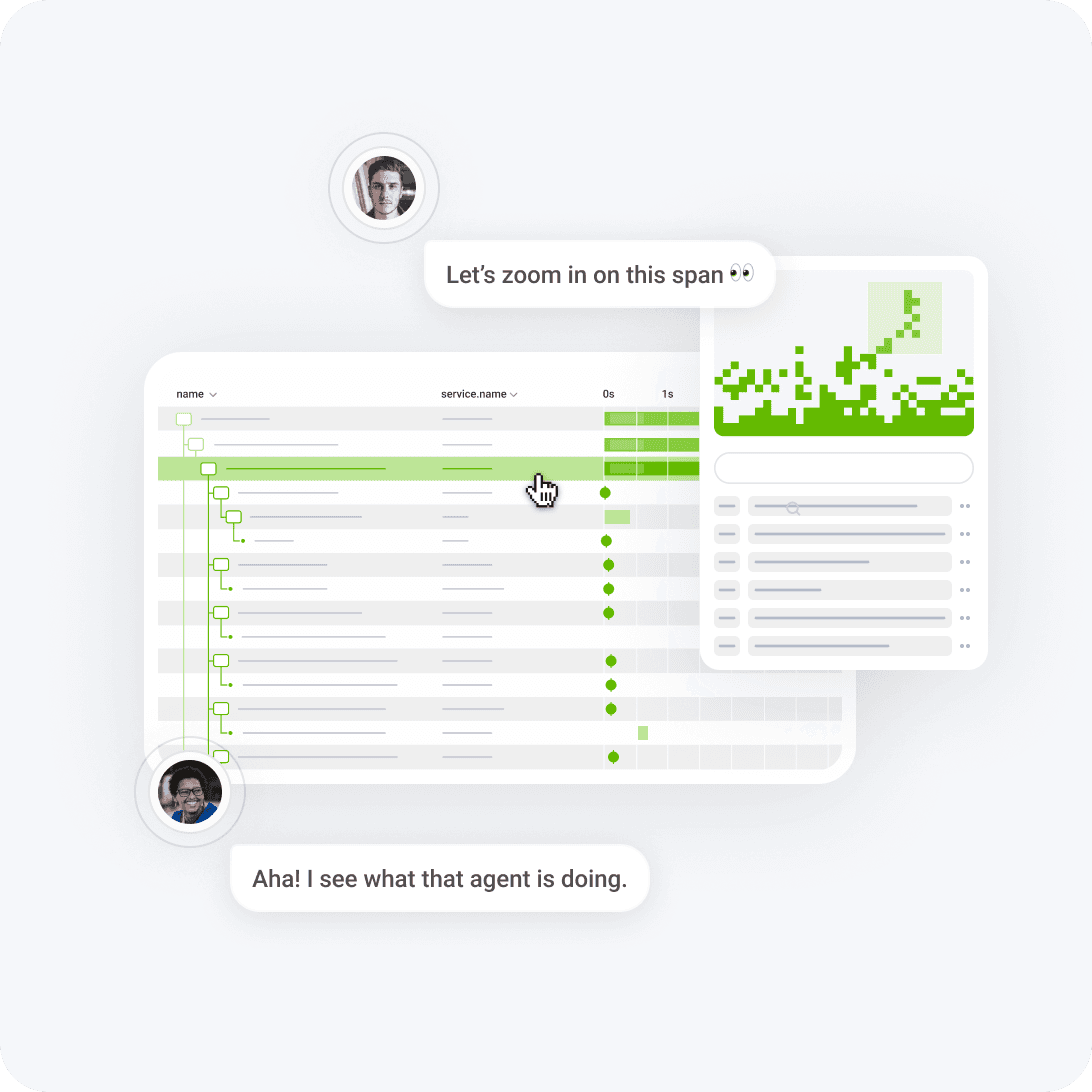

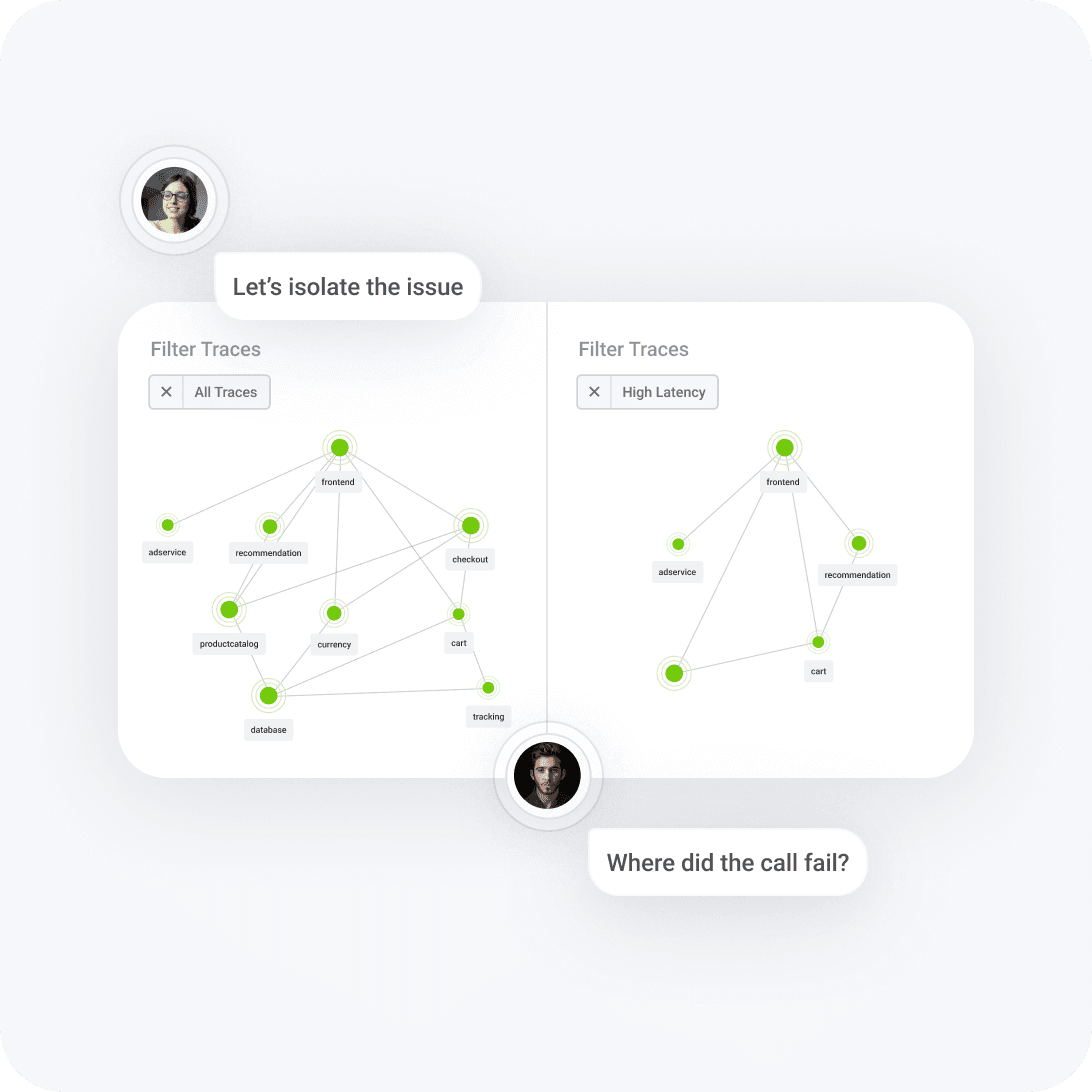

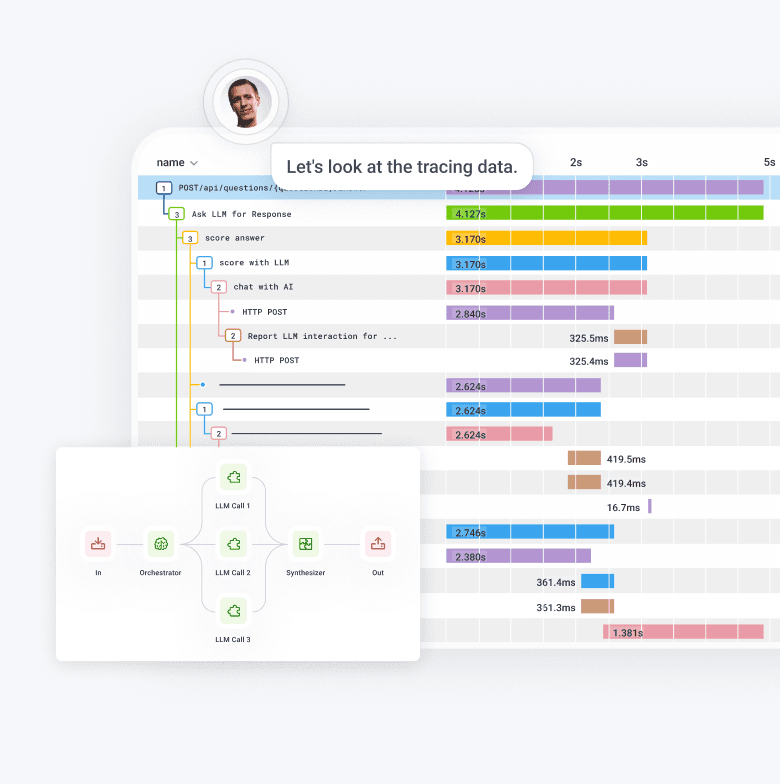

Tracing LLM execution is difficult, making it challenging to pinpoint when and why errors occur.

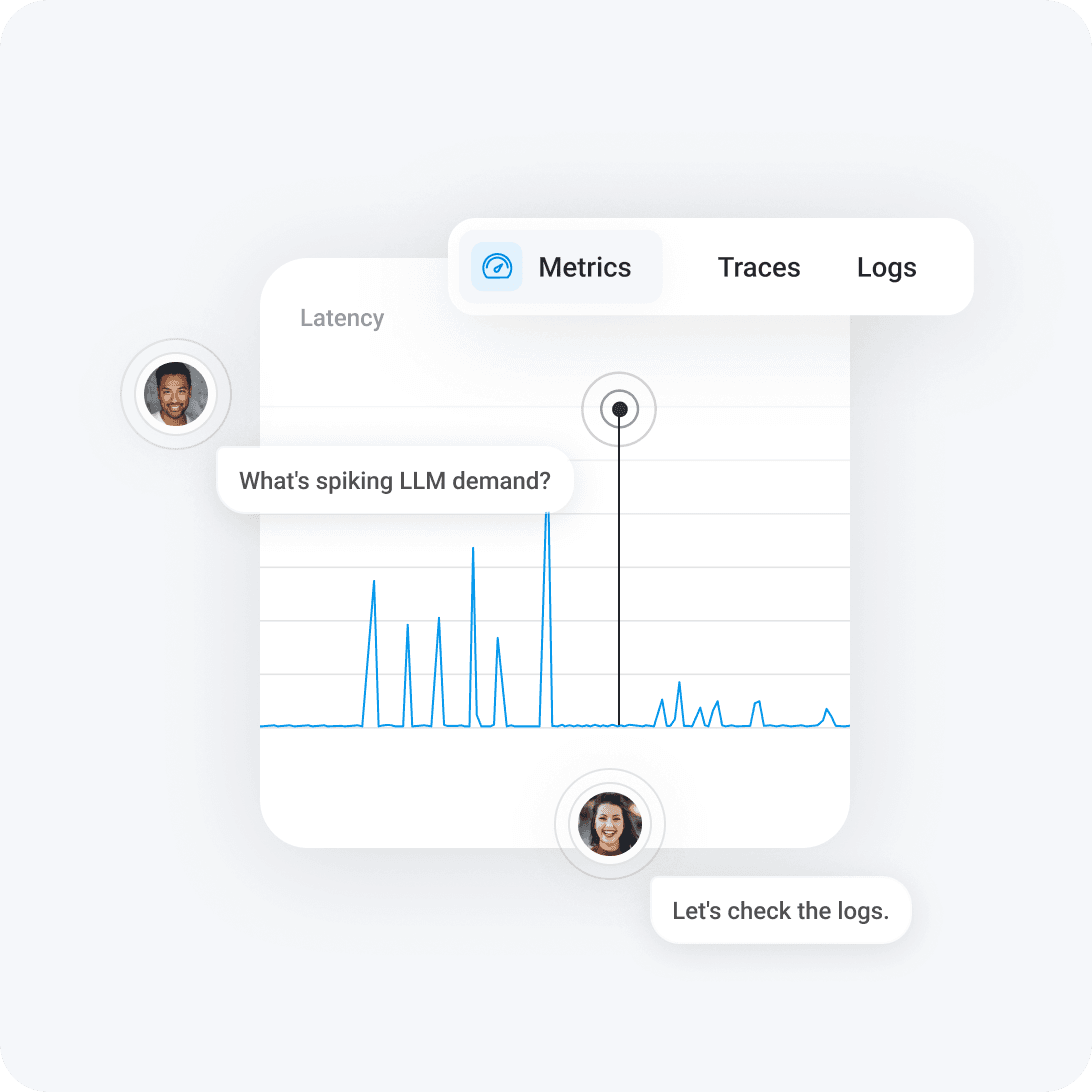

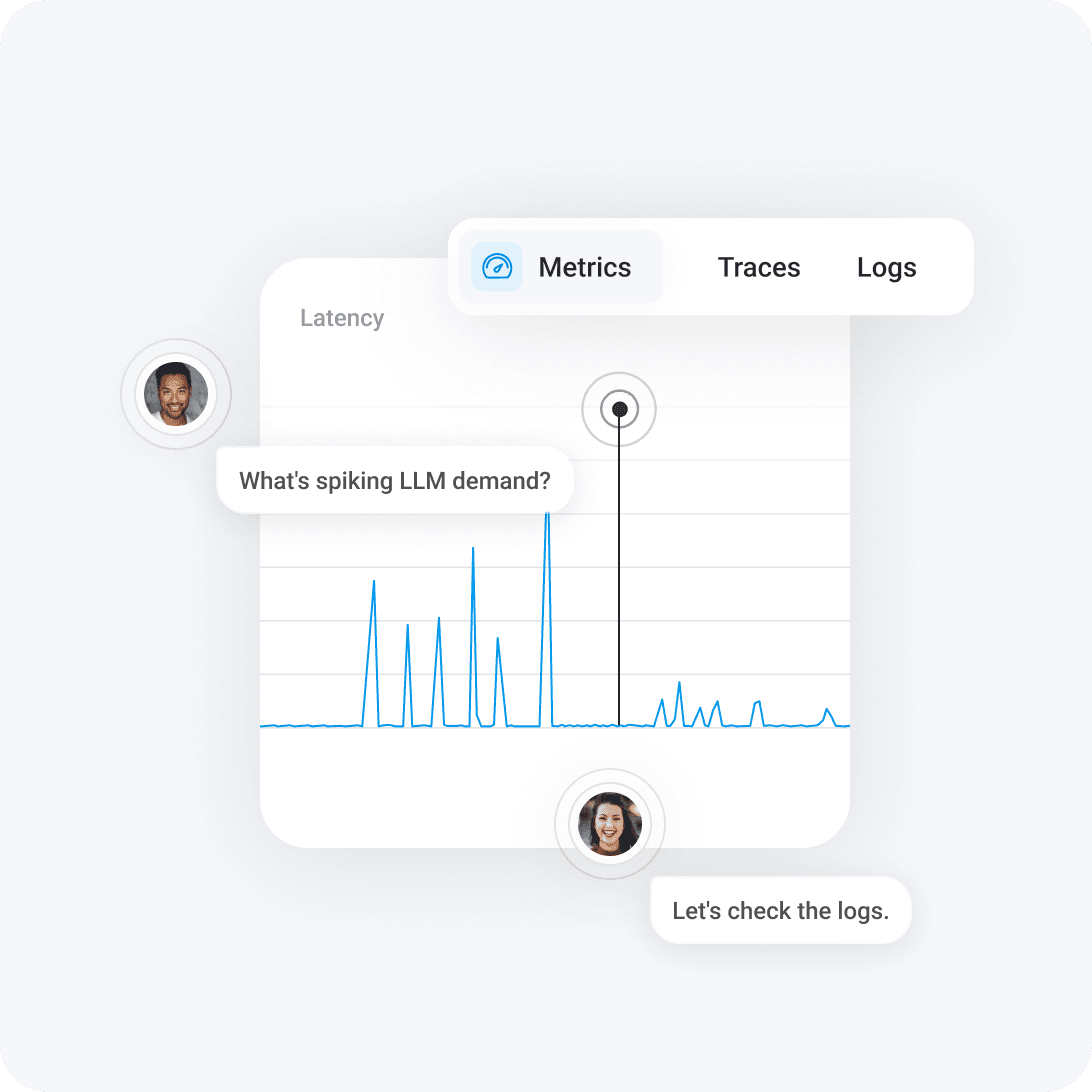

Powerful, granular observability into LLMs

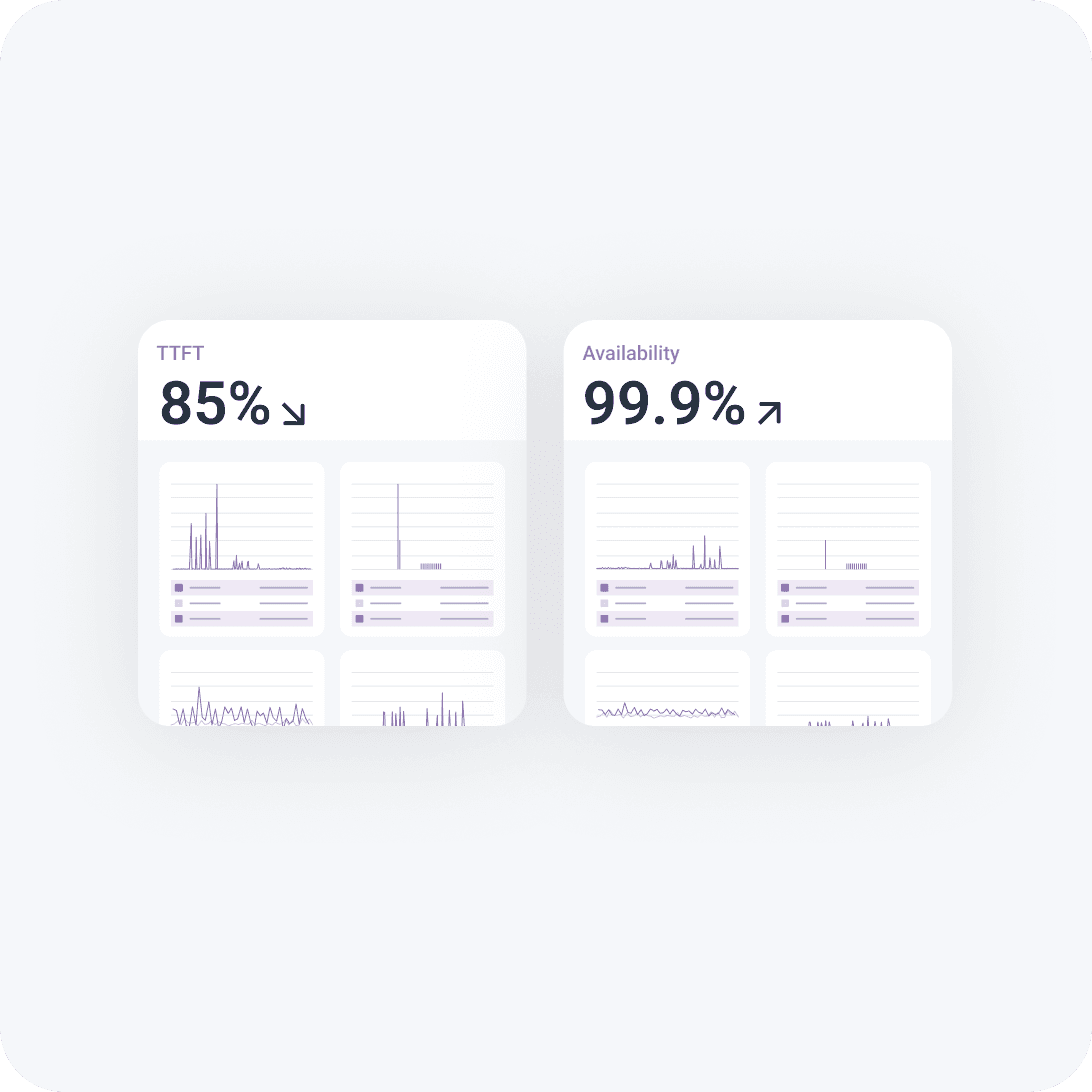

Honeycomb helps pinpoint issues, reduce hallucinations, and improve the quality of AI-powered applications. Understand system behavior as a whole, identify performance bottlenecks, and optimize your AI evals with real-world data.

Trusted by innovators and enterprises worldwide

Thanks to Honeycomb, SLO-based monitoring has proven superior to traditional metrics for LLM reliability. Given AI’s unpredictable nature, SLOs help us catch and investigate anomalies without triggering a flood of noisy alerts.

Related features

Discover our full suite of features, giving you everything you need to easily solve problems.

Experience the power of Honeycomb

Jump into our sandbox and start exploring—no signup required.

Want to know more?

Talk to our team to arrange a custom demo or get help finding the right plan.